Data Integrity, Why Do You Need It?

What is Data Integrity?

Many of us remember playing a childhood game, commonly called Telephone, in which everyone sat in a circle with the sole responsibility of passing a message along to the next player. The goal was to get the original message back to the first player without any changes. If your experiences were anything like mine, you would agree that the final message rarely made it back to the first player in the same state in which it left. In some cases, the final message was so far from the original that it set the whole group laughing. Although the game was meant to provide laughter and enjoyment, it was also a good teaching moment that reinforced the importance of attention to detail. It is a simple demonstration of the importance of data integrity and communication, and of their reliance on each other.

Data Integrity in the Process Industry

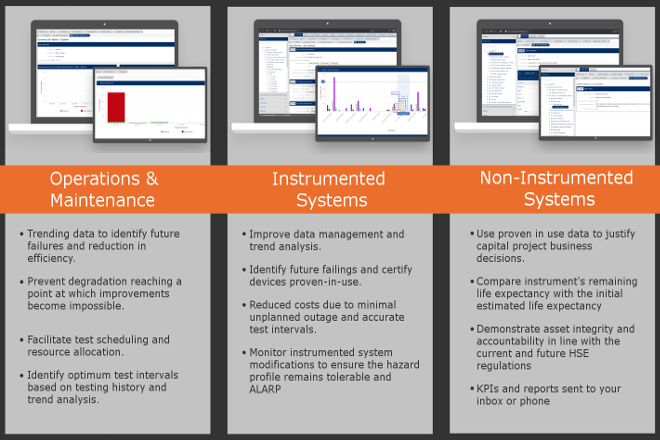

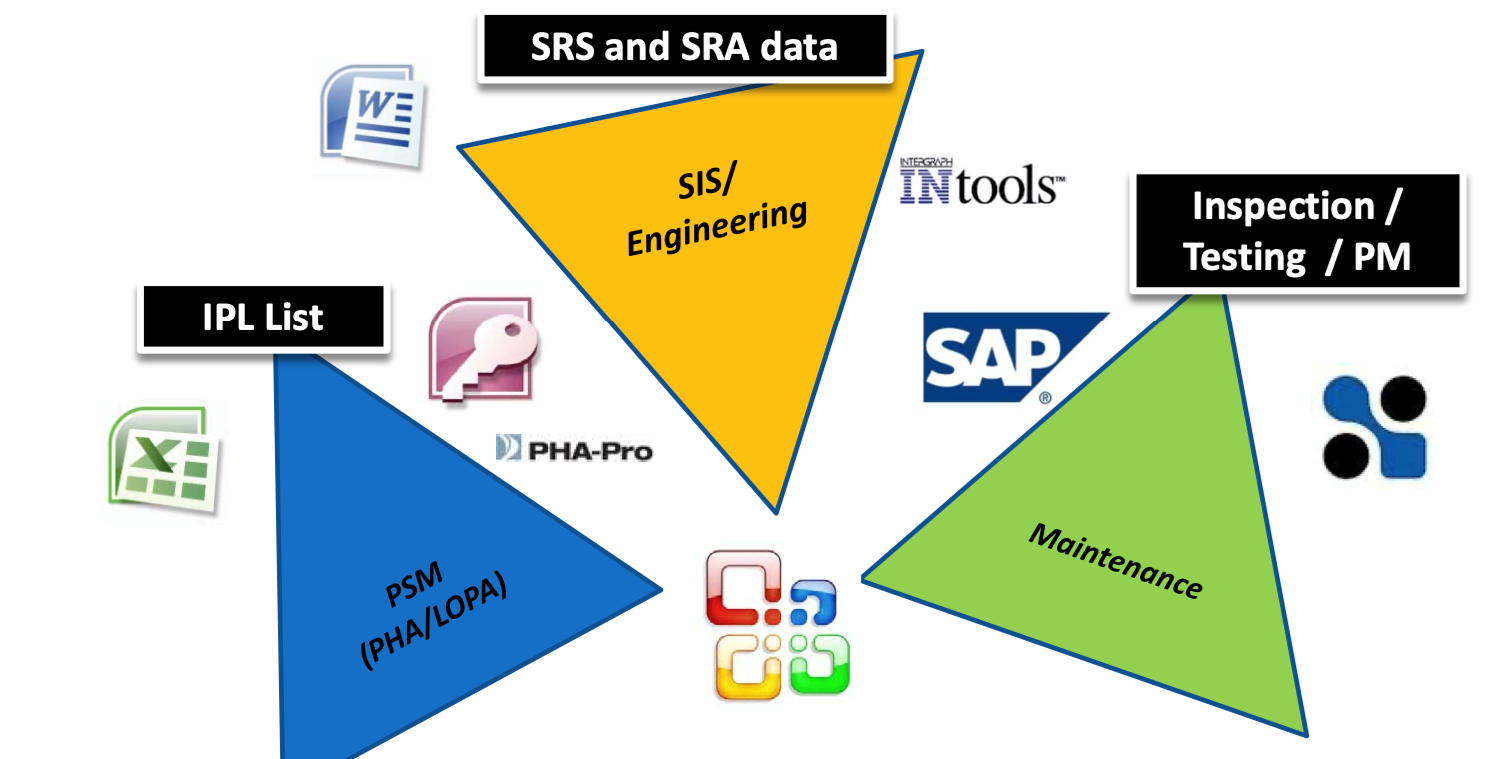

In the human body, blood transports oxygen absorbed through your lungs to your body’s cells with assistance from your heart, while the kidneys continuously filter the same blood for impurities. In this example, three systems (heart, kidneys, lungs) work together to keep the body properly maintained. Much like the human body, the process industry is complex and requires multiple systems working together simultaneously to achieve a common goal. If any one system were to break down, the result would be reduced performance and, possibly, eventual failure. The data integrity challenges are very similar whether you are tasked with designing a new site or maintaining existing facilities.

Chemical plants, refineries, and other process facilities maintain multiple documents that are required to operate the facility safely. Any breakdown in maintaining these documents and work processes could result in process upsets, injuries, downtime, production loss, environmental releases, lost revenue, increased overhead, and many other negative outcomes. Below is just a small sample of the critical documents that must be kept up to date to reflect the actual engineering design:

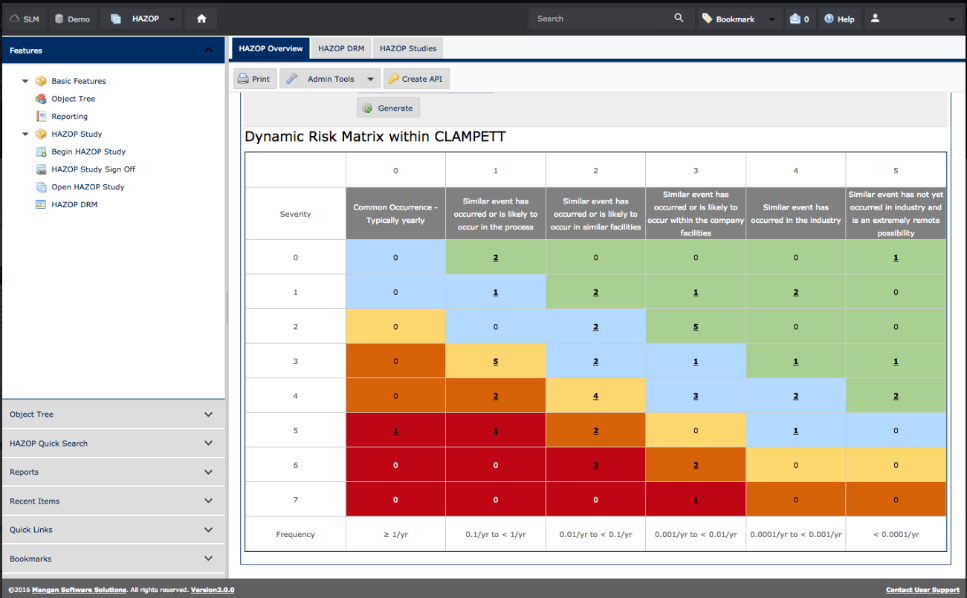

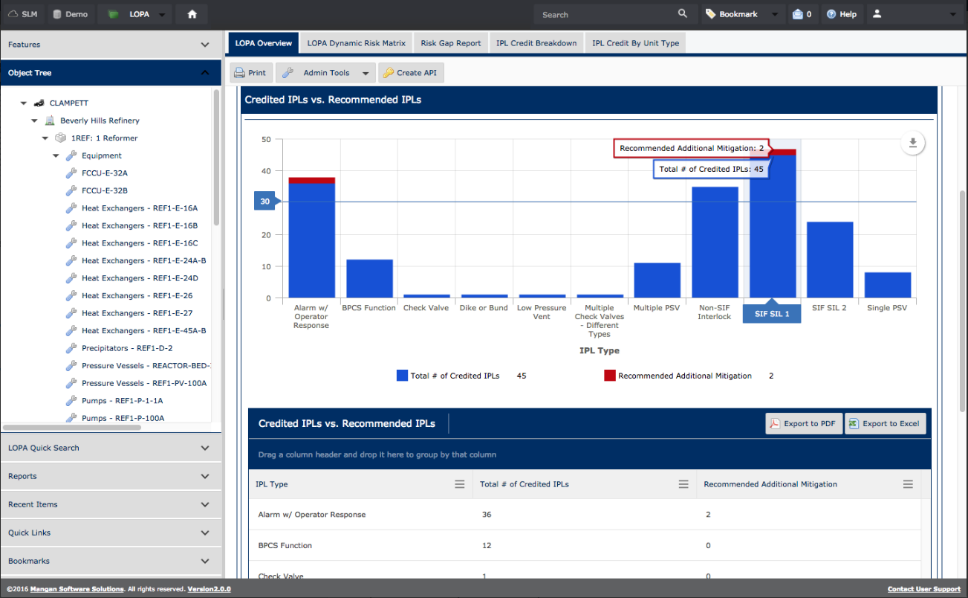

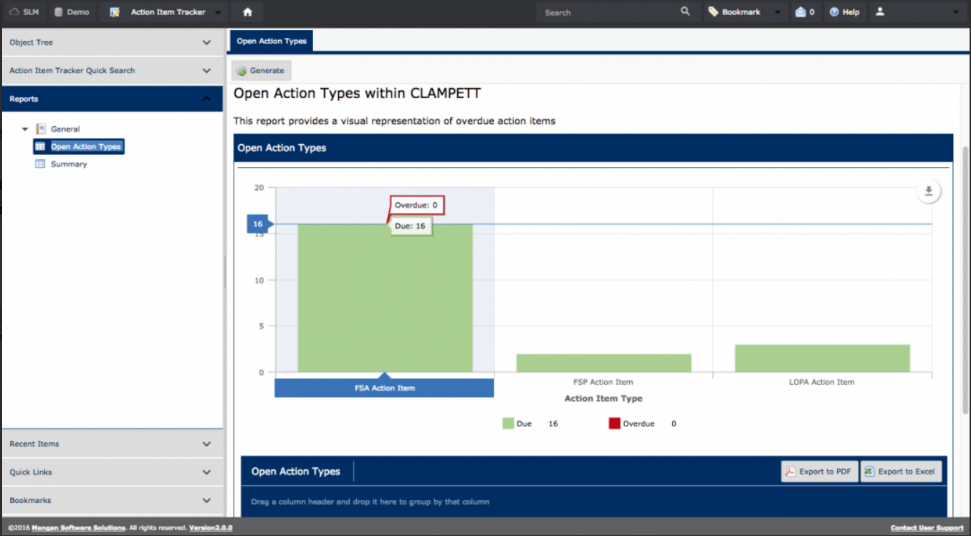

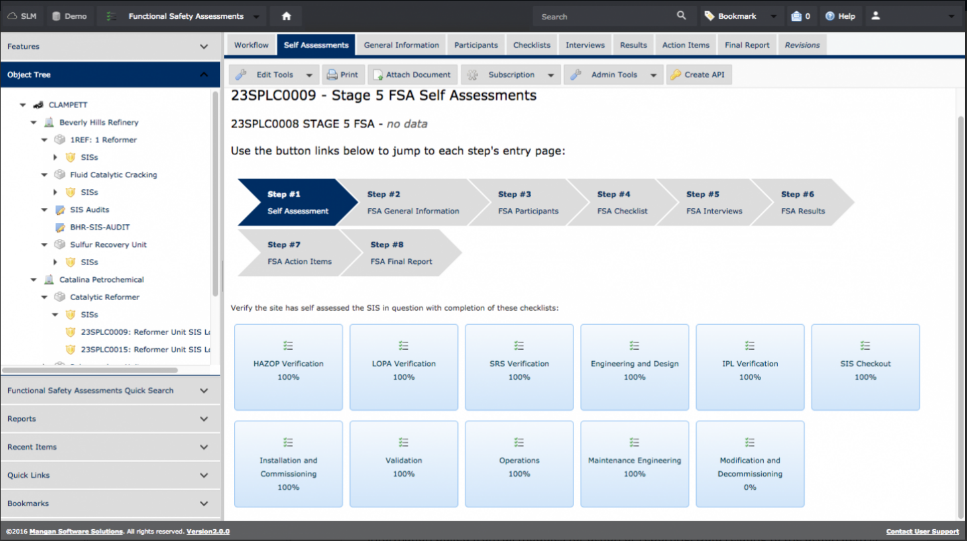

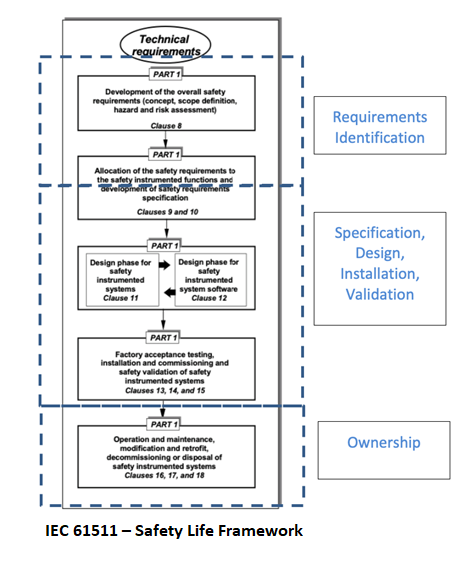

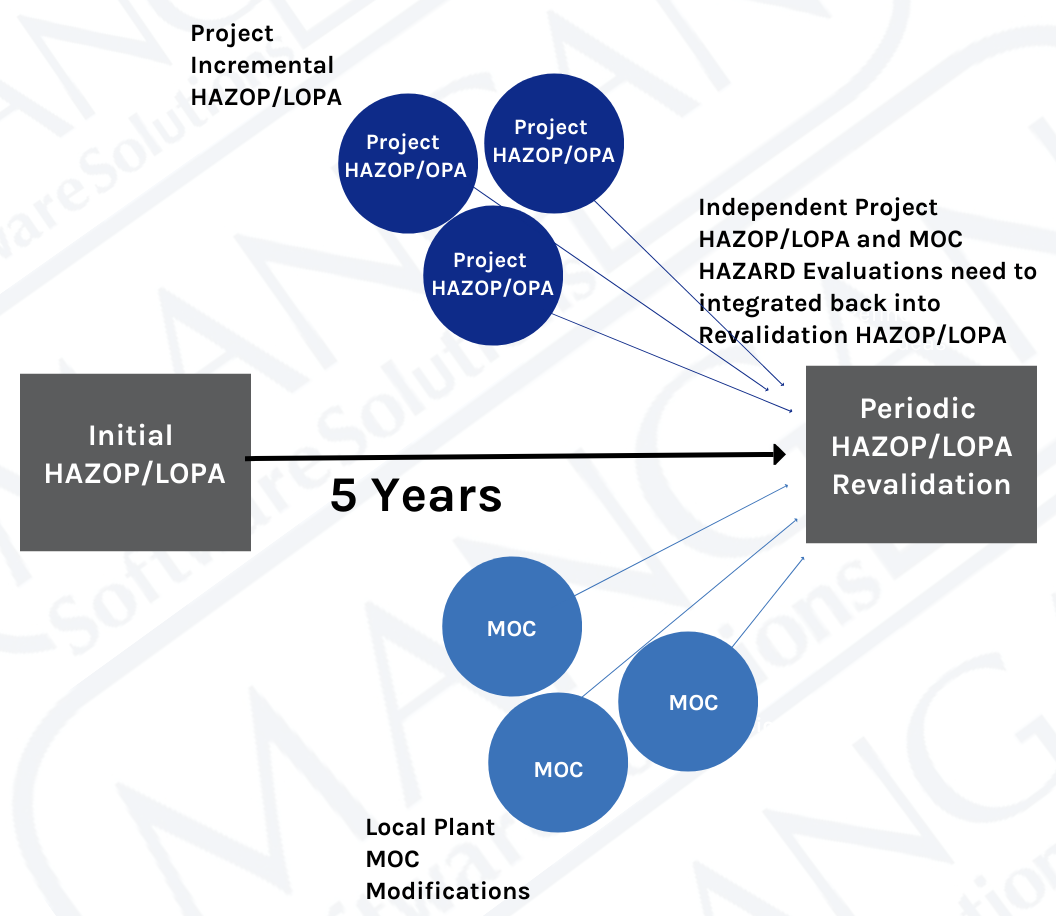

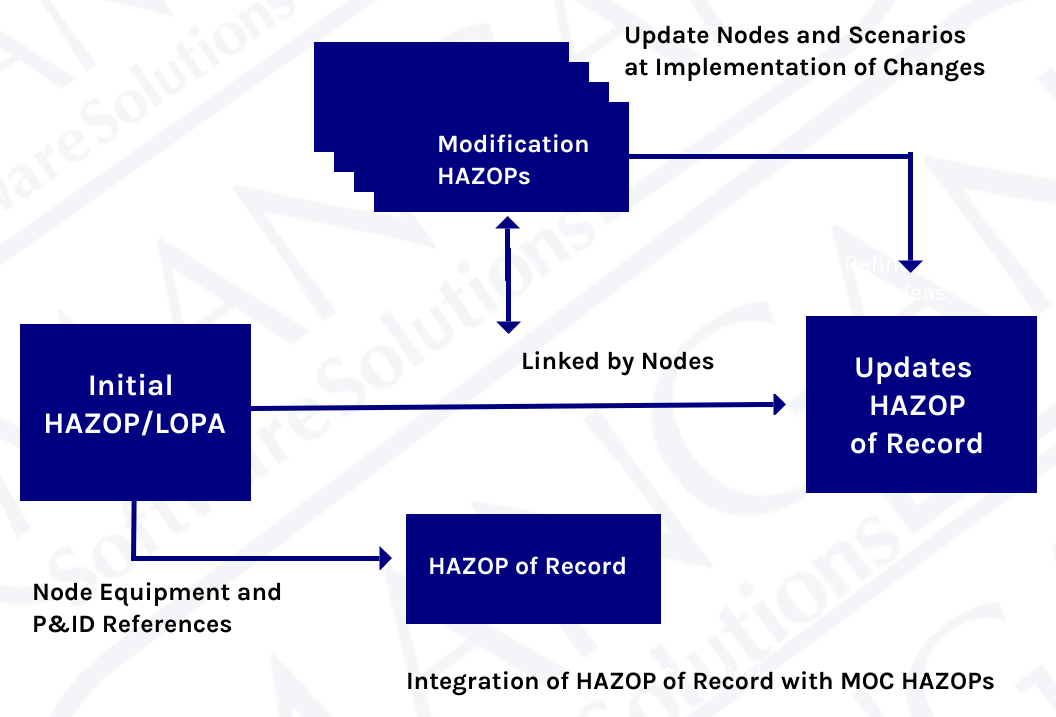

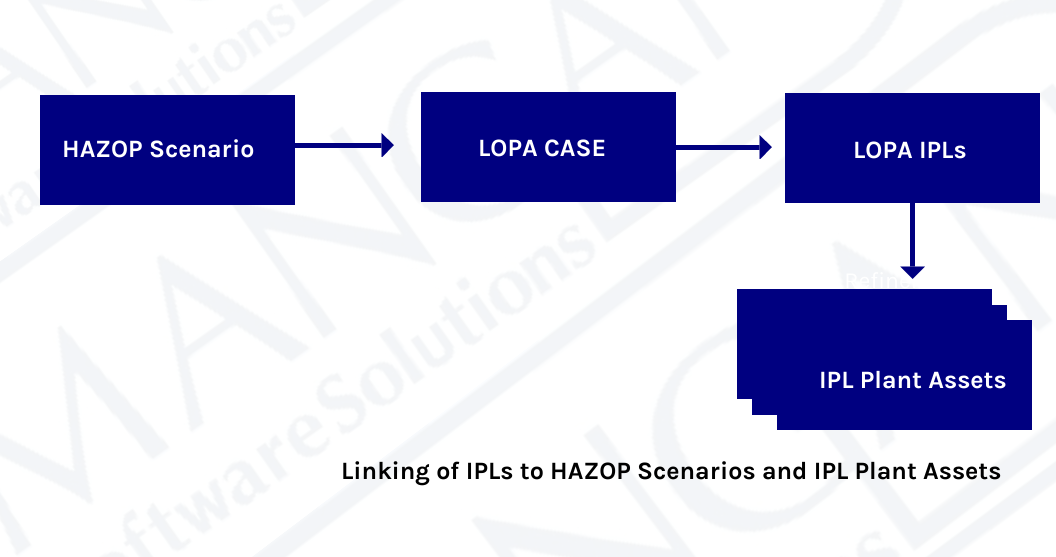

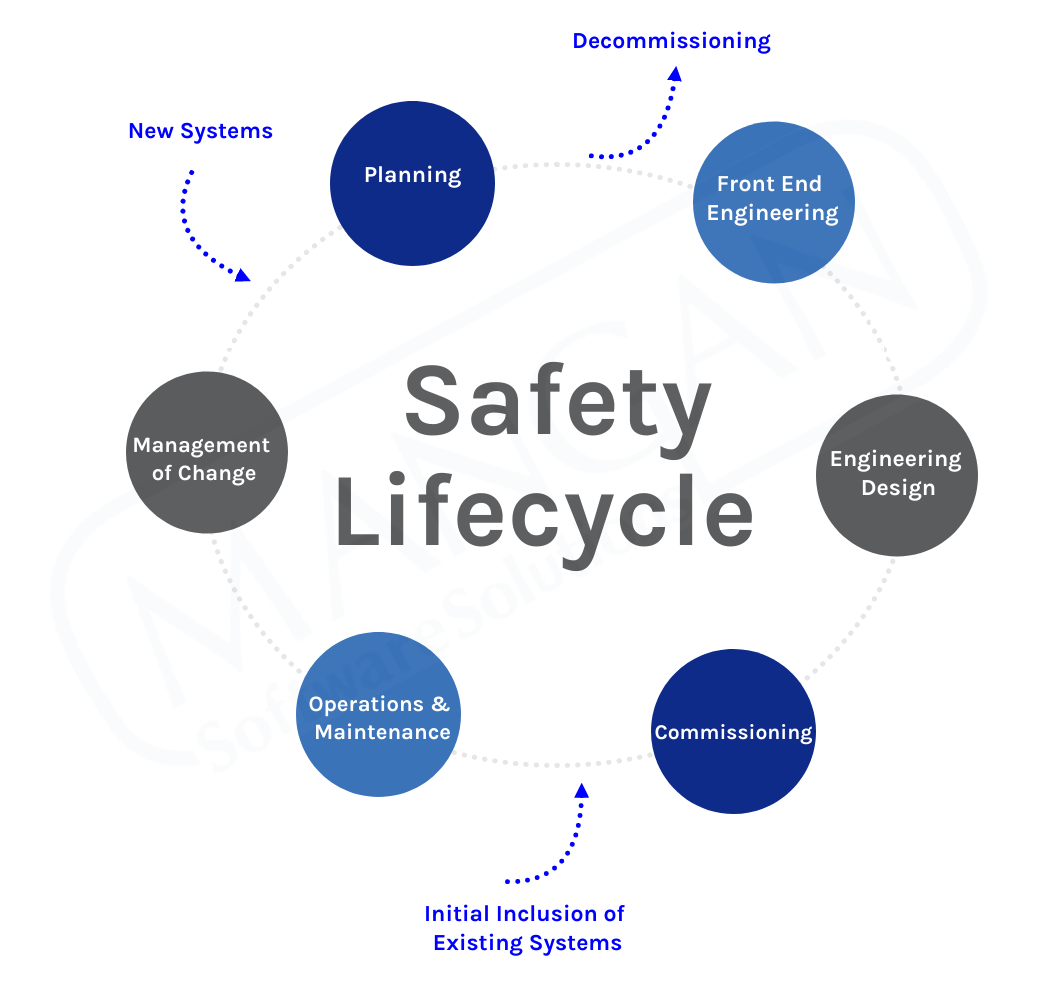

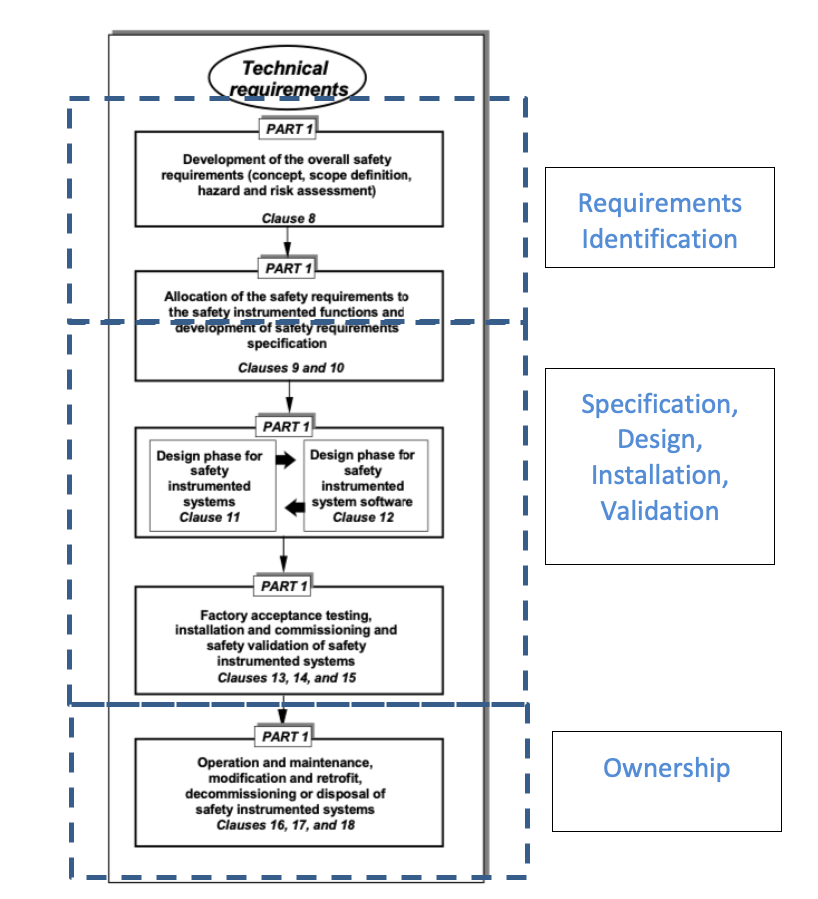

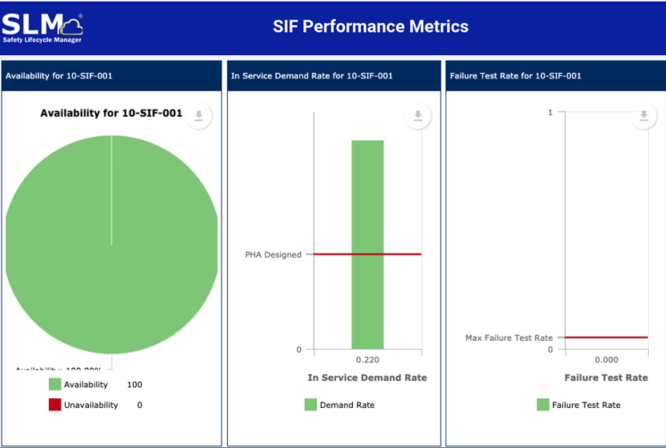

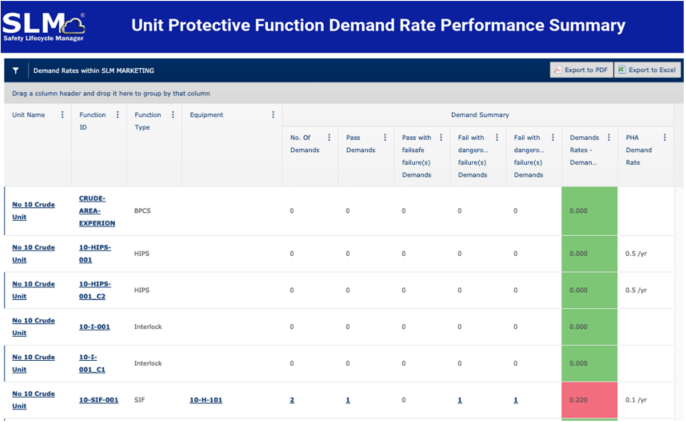

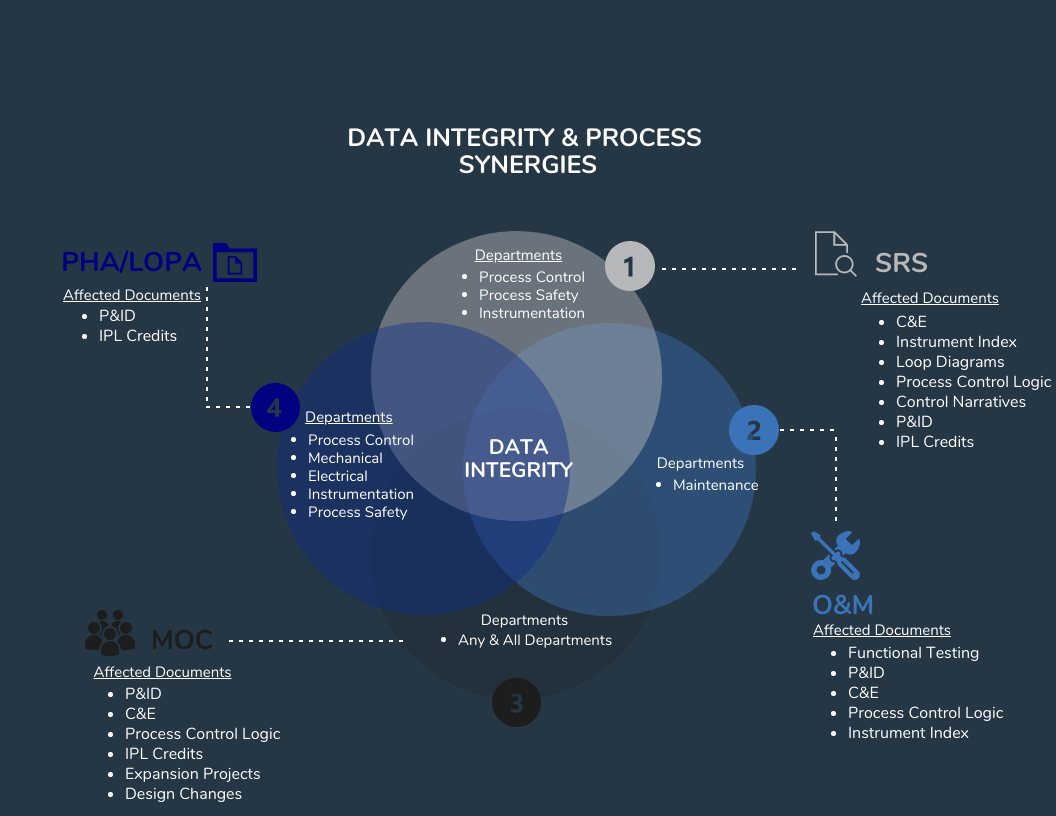

Many processes and workflows may trigger required changes to the above documentation, such as PHAs, LOPAs, HAZOPs, MOCs, SRSs, maintenance events, and action items, to name a few. Each of these processes requires specific personnel from multiple groups to complete. As the Telephone example earlier in this post showed, it can be a challenge to communicate efficiently and effectively in a small group, much less across multiple groups and organizations. Data integrity can easily be compromised when multiple processes and multiple workgroups are involved in decisions affecting multiple documents.

Considerations

When starting a new project or becoming involved in a new process, it is essential to consider how the requested changes will affect other workgroups and their respective documentation. Will your change impact others? Could understanding how your changes affect other data and workgroups minimize rework or prevent incidents? Could seeing the full picture help you make better decisions for your work process? Below are some approaches that can improve data integrity and communication in your workspace:

- Understand how changes you make may affect others

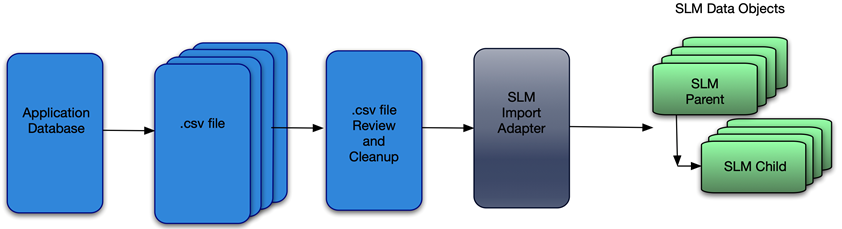

- Identify duplicated data that exist across multiple databases or files

- Look for ways to consolidate data and processes

- Create procedures to audit required changes

- Designate Systems of Record (SOR) for all data

- Assign roles responsible for following guidelines and maintaining data integrity and communication
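
As a rough illustration of the duplicated-data and System of Record points above, the sketch below compares a copy of some data against the source designated as its SOR and lists every mismatch. It is only a minimal example under stated assumptions, not a description of any particular tool: the `find_discrepancies` helper, the instrument tags, the field names, and all values are hypothetical, and it assumes each data source can be exported as a simple mapping from tag to field values.

```python
from typing import Dict, List, Tuple

Record = Dict[str, str]      # field name -> value
Source = Dict[str, Record]   # instrument tag -> record


def find_discrepancies(
    system_of_record: Source, copy: Source
) -> List[Tuple[str, str, str, str]]:
    """Return (tag, field, sor_value, copy_value) for every mismatch.

    Tags absent from the copy are skipped (they are not duplicated there);
    a field missing from the copy is reported as "<missing>".
    """
    issues = []
    for tag, sor_record in sorted(system_of_record.items()):
        copy_record = copy.get(tag)
        if copy_record is None:
            continue
        for field, sor_value in sorted(sor_record.items()):
            copy_value = copy_record.get(field, "<missing>")
            if copy_value != sor_value:
                issues.append((tag, field, sor_value, copy_value))
    return issues


# Hypothetical data: the SRS is treated as the System of Record, and a
# maintenance database holds a duplicate of some of the same fields.
srs = {
    "PT-101": {"trip_setpoint": "150 psig", "test_interval": "12 months"},
    "LT-205": {"trip_setpoint": "80 %", "test_interval": "6 months"},
}
maintenance_db = {
    "PT-101": {"trip_setpoint": "150 psig", "test_interval": "24 months"},
    "LT-205": {"trip_setpoint": "80 %"},
}

issues = find_discrepancies(srs, maintenance_db)
for tag, field, sor_value, copy_value in issues:
    print(f"{tag}.{field}: SOR={sor_value!r}, copy={copy_value!r}")
```

In this made-up data set the check flags two items: a test interval that differs between the two sources and one that is missing from the copy. A periodic audit procedure could run a comparison like this and route each mismatch to the workgroup that owns the affected document.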