What Went Wrong With The Process Safety System? Lessons From The 737 Max Crash

After any major accident, you have to investigate what errors may have contributed to it. Boeing’s 737 Max crashes highlight a few fundamental issues in process safety system design that need to be examined.

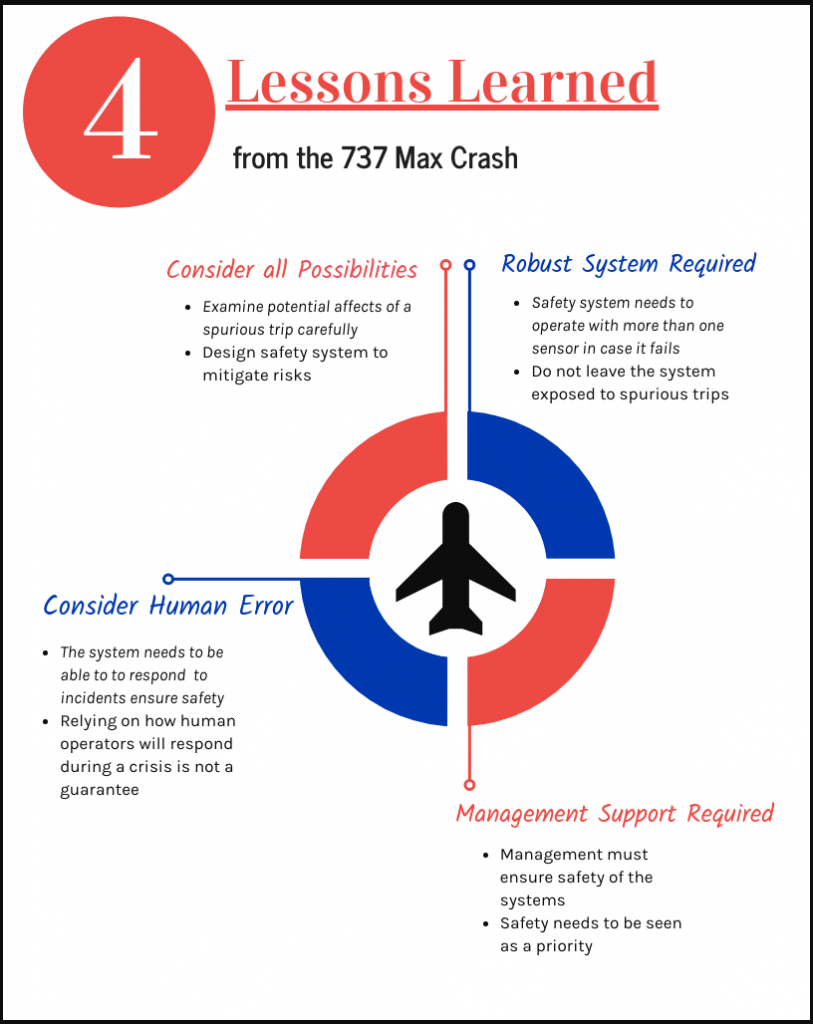

4 Lessons Learned:

1.) An extremely important part of specifying a Process Safety System is to seriously consider the effect of a spurious trip on the overall safety of the process. A safety system should be designed to keep you out of trouble, not to put you into it. If a spurious trip could at any point drive the process to an unsafe condition, there needs to be some careful thinking about how that unsafe condition can be avoided. In the 737 Max case, there are indications that operation of the Maneuvering Characteristics Augmentation System (MCAS) at low altitudes may not have been examined as carefully as it should have been.

2.) The second issue is the lack of a robust system. From reports so far, it appears that the MCAS acted on input from only one sensor, which left the system much more exposed to a spurious trip. The failure of that one and only sensor resulted in behavior that drove two planes into the ground. When a spurious trip of a Process Safety System could itself cause unsafe behavior, the system has to be robust enough to cope with a faulty input. A system whose misbehavior can send an airplane down extremely fast deserves more than one sensor. From the reports, this appears to have finally dawned on Boeing’s engineers after two crashes in 5 months.

3.) This might not be entirely the fault of the engineers who designed the system. A second sensor was known to be available as an option, which suggests that managers may have pushed for the less safe design to save some money.

4.) The last issue is relying on the people who operate the plane rather than on the safety system itself, which leaves room for human error. It appears that a robust system was not considered necessary because the pilots were expected to be able to turn off the system if they needed to. This appears to have worked in some of the incidents reported by US airlines. However, in the actual crashes, reports indicate that the flight crews either could not or did not turn off the system. There is some speculation that their training wasn’t sufficient, but in any case, people under a lot of stress tend to forget things and make mistakes.

People can’t be expected to respond to unexpected events reliably. It’s worse if they haven’t been well trained, or it’s been a long time since they were trained. Expecting operator response comes with a burden to train well and train often.
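The single-sensor exposure described in lesson 2 can be illustrated with a short sketch of sensor voting, a common way to make a safety function tolerate one bad input. This is a generic illustration, not a description of how MCAS or any Boeing system is implemented. The function names, the two-out-of-three arrangement, and the numbers are all assumptions chosen for the example.

```python
from statistics import median


def vote_1oo1(reading, trip_limit):
    """Single-sensor logic: one bad reading is enough to trip."""
    return reading > trip_limit


def vote_2oo3(readings, trip_limit):
    """Two-out-of-three voting: trip only if at least two sensors agree."""
    if len(readings) != 3:
        raise ValueError("expected exactly three readings")
    votes = sum(1 for r in readings if r > trip_limit)
    return votes >= 2


def disagreeing_sensors(readings, tolerance):
    """Return indexes of sensors that deviate from the median by more than tolerance."""
    mid = median(readings)
    return [i for i, r in enumerate(readings) if abs(r - mid) > tolerance]


# Hypothetical, made-up values: sensor 0 has failed high, the other two are healthy.
readings = [74.5, 5.0, 5.2]
trip_limit = 15.0

print(vote_1oo1(readings[0], trip_limit))   # single failed sensor causes a spurious trip
print(vote_2oo3(readings, trip_limit))      # the two healthy sensors outvote the failed one
print(disagreeing_sensors(readings, 2.0))   # the failed sensor is flagged for maintenance
```

With the made-up readings above, the single-sensor function trips on the failed input while the voted function does not, and the disagreement check identifies which sensor to investigate. Voting adds cost and maintenance, so the design choice is a trade-off, but it is the kind of trade-off that should be weighed whenever a spurious trip can itself create a hazard.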

Summary:

The 737 Max crashes are a stark reminder that the design of a safety system responsible for people’s lives deserves healthy portions of realism, pessimism, and thorough consideration of potential risks. You really need to spend time thinking before deciding that a design is acceptable, even when there are other pressures from management.

Rick has a BS in Chemical Engineering from the University of California, Santa Barbara and is a registered Professional Control Systems Engineer in California and Colorado. Rick has served as a member and chairman of both the API Subcommittee for Pressure Relieving Systems and the API Subcommittee on Instrumentation and Control Systems.